TDMQ for CKafka

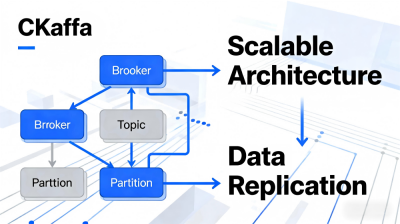

2025-12-12 16:24

TDMQ for CKafka is a distributed, high-throughput, highly scalable messaging system that is 100% compatible with Apache Kafka versions 0.9 to 2.8. Built on the publish/subscribe model, CKafka decouples producers from consumers so that the two sides interact asynchronously and neither has to wait for the other. It offers high availability, data compression, and support for both offline and real-time data processing, which makes it suitable for scenarios such as log compaction and collection, monitoring data aggregation, and streaming data integration.

In terms of core capabilities, CKafka integrates with Big Data Suite (e.g., EMR, Spark) to build end-to-end data processing pipelines. Its highly reliable distributed deployment supports horizontal cluster expansion and seamless instance upgrades, and the underlying system scales elastically and automatically to match business needs.

In key scenarios, Log Collection is the point of data inflow: client agents aggregate log data and provide a stable data source for stream data processing. In stream data processing scenarios, CKafka combined with services such as Stream Compute SCS enables real-time data analysis, anomaly detection, and offline data reprocessing. Compatibility with Apache Kafka lowers the barrier to entry, and the close fit with real-time and stream data processing, the integration with Big Data Suite, and the support for Log Collection make CKafka a core platform for enterprise data flow and value extraction.
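The publish/subscribe decoupling described in the overview can be illustrated with a toy, in-memory model. This is only a sketch of the general idea (an append-only log plus one read offset per consumer group); `ToyTopic` and the group names are hypothetical and are not part of any CKafka or Kafka API.

```python
from collections import defaultdict


class ToyTopic:
    """In-memory stand-in for a single-partition topic (illustration only)."""

    def __init__(self):
        self._log = []                     # append-only message log
        self._offsets = defaultdict(int)   # next offset to read, per consumer group

    def publish(self, message):
        """Append a message and return its offset; never waits for consumers."""
        self._log.append(message)
        return len(self._log) - 1

    def poll(self, group, max_records=10):
        """Return unread messages for a group and advance that group's offset."""
        start = self._offsets[group]
        batch = self._log[start:start + max_records]
        self._offsets[group] = start + len(batch)
        return batch


topic = ToyTopic()
for line in ["login ok", "disk 91% full", "login failed"]:
    topic.publish(line)                    # the producer finishes before anyone reads

realtime = topic.poll("alerting", max_records=2)   # one group reads at its own pace
offline = topic.poll("reporting")                  # another group reads independently
remaining = topic.poll("alerting")                 # "alerting" resumes where it left off
```

Because each group keeps its own offset, a slow or offline consumer does not hold back the producer or other consumers, which is the property the overview refers to as interaction "without mutual waiting".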

Frequently Asked Questions

Q: Tencent Cloud CKafka is 100% compatible with Apache Kafka. What practical value does this bring to stream data processing and real-time data scenarios?

A: Tencent Cloud CKafka is fully compatible with Apache Kafka versions 0.9 to 2.8, and this compatibility matters in both scenarios. In stream data processing, users can migrate existing Kafka-based stream processing logic to CKafka without modifying it, and can directly reuse mature components such as Kafka Streams and Kafka Connect. Combined with the integration between CKafka and Stream Compute SCS, this supports real-time data analysis, anomaly detection, and offline data processing while reducing migration and transformation costs. In real-time data scenarios, users can keep their familiar development patterns and toolchains and quickly integrate real-time monitoring data and business data. CKafka's distributed, high-throughput design receives and transmits real-time data efficiently, which helps prevent data backlogs. The ecosystem that comes with compatibility also lets CKafka connect quickly to Big Data Suite for immediate analysis of real-time data. Overall, Apache Kafka compatibility makes both kinds of scenario smoother to implement and protects users' existing technical investments.
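As a rough illustration of what "migrating without modifications" can look like on the client side, the sketch below builds a consumer configuration using standard Kafka client property names (`bootstrap.servers`, `group.id`, `auto.offset.reset`, `enable.auto.commit`). The helper `kafka_client_config` and both host names are hypothetical placeholders, and the sketch assumes no extra authentication settings are needed, which may not hold for a given instance.

```python
def kafka_client_config(bootstrap_servers, group_id, overrides=None):
    """Build a consumer config dict using standard Kafka client property names."""
    config = {
        "bootstrap.servers": bootstrap_servers,  # the only value that is cluster-specific here
        "group.id": group_id,
        "auto.offset.reset": "earliest",         # start from the oldest retained message
        "enable.auto.commit": False,             # commit offsets explicitly after processing
    }
    config.update(overrides or {})
    return config


# Hypothetical addresses: a self-managed cluster and a CKafka instance endpoint.
self_managed = kafka_client_config("kafka-1.internal:9092", "stream-job")
migrated = kafka_client_config("ckafka-instance.example.com:9092", "stream-job")

# Under these assumptions, only the broker address differs between the two setups.
changed_keys = sorted(k for k in migrated if migrated[k] != self_managed[k])
```

With a Kafka client library such as `confluent-kafka` installed, a dict of this shape is what would be passed to `confluent_kafka.Consumer(config)`; the processing code that follows would stay the same.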

Q: How does Tencent Cloud CKafka provide data support for Big Data Suite through Log Collection, and how do the two work together in Stream Data Processing?

A: Tencent Cloud CKafka provides a stable data source for Big Data Suite through Log Collection. Deployed client agent components collect various types of log data, including application runtime logs and operational behavior logs. After aggregation, the data is sent to the CKafka cluster in a uniform way, which keeps log data complete and timely and gives Big Data Suite high-quality input for analysis and processing. In stream data processing, the two work together as follows. First, the large volume of data gathered through Log Collection is stored in CKafka. Big Data Suite (e.g., Spark in EMR) can then consume that data from CKafka in batches for offline analysis and reprocessing, for example to generate trend reports. At the same time, CKafka supports real-time data pushing, so Big Data Suite can read the stream in real time and work with stream computing services to perform real-time analysis and anomaly detection and to identify system issues quickly. Log Collection is the starting point of the data flow, and its efficiency determines how reliably Big Data Suite is supplied with data. Together, the two cover both real-time and offline processing of the same data.
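The flow described above, from log lines shaped by an agent to anomaly detection on the consuming side, can be sketched in plain Python. This is a simplified stand-in, not the behavior of any specific agent or of Stream Compute SCS: the record layout, `to_record`, `error_rate_alerts`, the window size, and the threshold are all assumptions chosen for illustration.

```python
import json
from collections import deque


def to_record(host, line):
    """Shape one raw log line as a (key, value) pair of bytes, as an agent might."""
    level, _, message = line.partition(" ")
    value = json.dumps({"host": host, "level": level, "message": message})
    return host.encode("utf-8"), value.encode("utf-8")


def error_rate_alerts(records, window=4, threshold=0.5):
    """Yield the index of each record where the share of ERROR events
    among the last `window` records reaches `threshold`."""
    recent = deque(maxlen=window)          # sliding window of is-error flags
    for index, (_key, value) in enumerate(records):
        event = json.loads(value)
        recent.append(event["level"] == "ERROR")
        if len(recent) == window and sum(recent) / window >= threshold:
            yield index


lines = [
    "INFO started",
    "ERROR db timeout",
    "INFO retry",
    "ERROR db timeout",
    "ERROR db down",
]
records = [to_record("web-1", line) for line in lines]
alerts = list(error_rate_alerts(records))  # indexes at which an alert would fire
```

Since the records stay in the log after being read, the same list could later be re-scanned with a different window or threshold, which mirrors the offline reprocessing path mentioned in the answer.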

Q: In Real-Time Data processing scenarios, what are the advantages of combining Tencent Cloud CKafka with the Big Data Suite, and how does the Apache Kafka compatibility feature facilitate the connection between Log Collection and Stream Data Processing?

A: In real-time data processing scenarios, combining Tencent Cloud CKafka with Big Data Suite offers clear advantages. CKafka provides high throughput and low latency for receiving large volumes of real-time data, while Big Data Suite (e.g., Spark, EMR) provides the computing capability to analyze, clean, and extract value from that data immediately. The combination also supports offline data storage and reprocessing, so it covers needs ranging from real-time monitoring to trend analysis. In addition, one-click deployment of data flow pipelines between CKafka and Big Data Suite reduces system setup and maintenance costs. Apache Kafka compatibility smooths the connection between Log Collection and stream data processing. During the Log Collection phase, users can rely on the Kafka-compatible client ecosystem and connect mature log collection tools (e.g., Fluentd) to CKafka directly, without developing additional adaptation plugins. During the stream data processing phase, CKafka integrates with processing components that speak the Kafka protocol, so data flows end to end from collection through transmission to processing. This avoids compatibility issues during data transmission and keeps stream data processing continuous and efficient.