Batch Compute

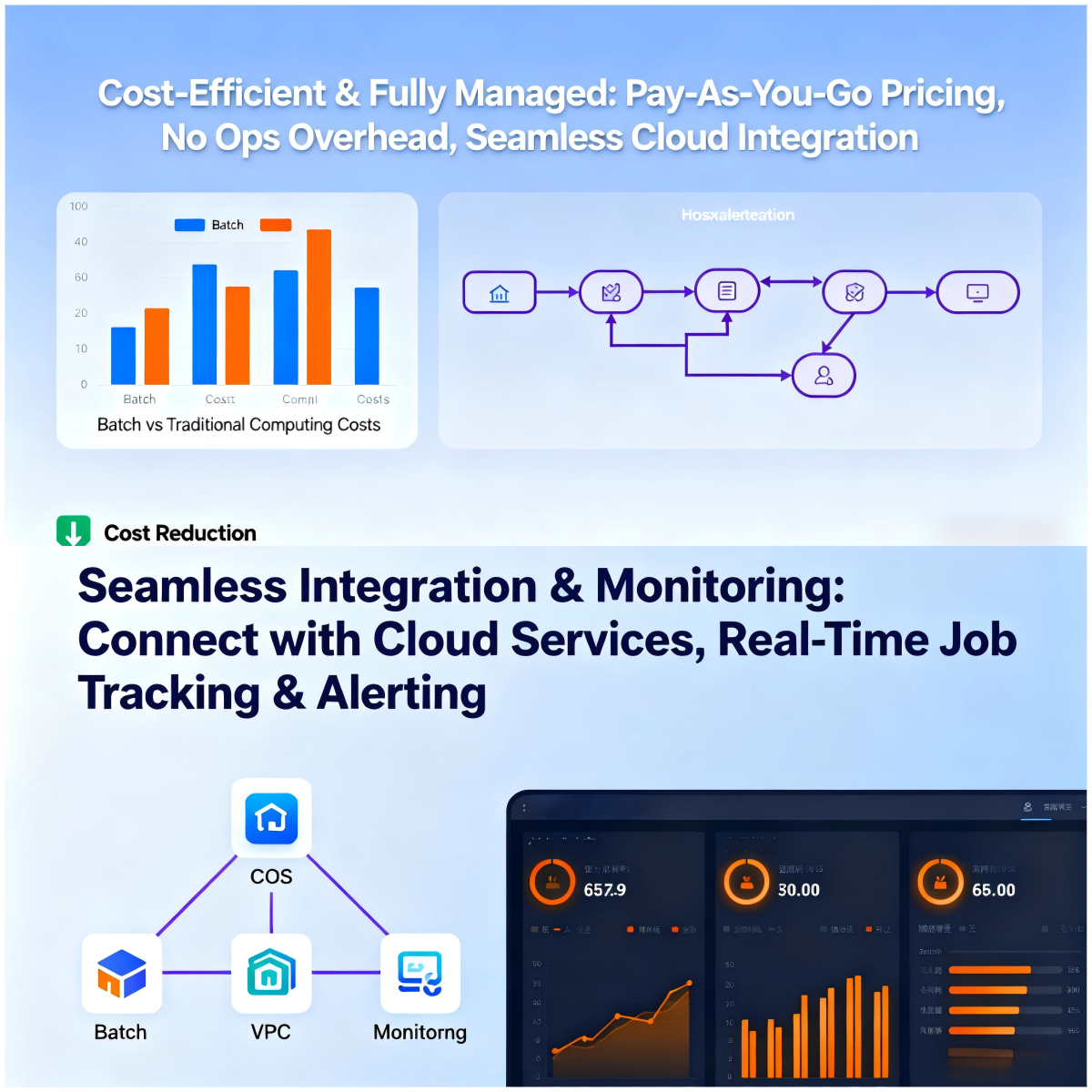

2025-12-04 17:23

Tencent Cloud Batch Computing (Batch) is a low-cost distributed computing platform for enterprises and research institutions, focused on batch data processing. Whether the workload is big data batch processing, batch processing for ML training, or batch video rendering, Batch provides efficient, stable compute through intelligent resource scheduling and fully managed, end-to-end services.

For big data batch processing, Batch supports dynamic configuration of computing resources and scales elastically to handle jobs of different sizes. Because there is no upfront cost, the barrier to entry for enterprises is low. For ML training, it supports multi-instance concurrency and task dependency modeling, so distributed training environments can be set up quickly and models can be iterated faster. For video rendering, Batch can build automated rendering pipelines and, backed by large-scale resources and job scheduling, completes rendering workloads efficiently.

Batch Computing integrates closely with cloud services such as Object Storage (COS), covering the full loop from data acquisition through computation to result storage. Users can concentrate on data processing and analysis instead of resource management and environment deployment, which makes Batch Computing well suited to big data batch processing, ML training, and video rendering.

Frequently Asked Questions

Q: As a core platform for batch data processing, how does Batch Computing efficiently support two quite different needs at once: big data batch processing and batch video rendering?

A: Batch Computing handles both workloads through flexible resource scheduling and fully managed, end-to-end operation. For big data batch processing, computing resources scale dynamically and elastically, and storage mounting gives jobs fast access to massive datasets, which supports the high concurrency that TB- and PB-scale processing demands. For batch video rendering, DAG workflow editing lets you build rendering pipelines with explicit dependencies between steps, and multi-instance concurrent execution runs independent steps in parallel to move large rendering jobs forward. Because the service is fully managed, neither workload requires manual creation or destruction of resources, so both data-heavy processing jobs and compute-intensive rendering jobs can run at low cost and high efficiency.
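To make the DAG idea concrete, here is a minimal Python sketch of how a dependency graph for a rendering pipeline determines which steps can run concurrently. It uses the standard library's `graphlib.TopologicalSorter`, not any Batch Computing API; the task names and the `execution_waves` helper are hypothetical, and running the graph wave by wave is a simplification of what a real scheduler does.

```python
from graphlib import TopologicalSorter

# Hypothetical rendering pipeline: each key lists the tasks it depends on.
# Task names are illustrative only; they are not Batch Computing identifiers.
PIPELINE = {
    "prepare_assets": set(),
    "render_part_1": {"prepare_assets"},
    "render_part_2": {"prepare_assets"},
    "render_part_3": {"prepare_assets"},
    "composite": {"render_part_1", "render_part_2", "render_part_3"},
    "encode_video": {"composite"},
}


def execution_waves(dag: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves; every task in a wave can run concurrently
    because all of its dependencies finished in earlier waves."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted for deterministic output
        waves.append(ready)
        sorter.done(*ready)  # treat the whole wave as finished
    return waves


if __name__ == "__main__":
    for i, wave in enumerate(execution_waves(PIPELINE), start=1):
        print(f"wave {i}: {', '.join(wave)}")
```

Running it prints four waves: `prepare_assets`, then the three `render_part_*` tasks together, then `composite`, then `encode_video`. The three render parts share a wave because each depends only on `prepare_assets`, which is what allows a multi-instance scheduler to run them in parallel.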

Q: What are the core advantages of using Batch Computing for batch processing in ML training? Can it also meet the efficiency requirements of big data batch processing?

A: Batch Computing offers three core advantages for batch processing in ML training. First, task dependency modeling lets you orchestrate training workflows flexibly, matching the multi-stage nature of ML training. Second, elastic resource scaling adjusts the number of instances to the scale of each training task, avoiding wasted resources. Third, deep integration with cloud storage simplifies access to training data and model files. The same capabilities also meet the efficiency requirements of big data batch processing: multi-instance concurrency speeds up processing, and storage mounting keeps access to massive datasets efficient. Batch Computing can therefore serve as a single platform for both ML training and big data batch processing.
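As a rough illustration of matching instance count to task scale, the Python sketch below uses a simple hypothetical rule: provision enough instances to run every task at once, clamped between a minimum and a maximum. The function `instances_needed` and its parameters (`tasks_per_instance`, `min_instances`, `max_instances`) are assumptions for illustration and do not describe Batch Computing's actual scaling policy.

```python
import math


def instances_needed(num_tasks: int, tasks_per_instance: int,
                     min_instances: int = 0, max_instances: int = 100) -> int:
    """Hypothetical sizing rule (not Batch Computing's real policy):
    run every task concurrently, clamped to [min_instances, max_instances]."""
    if tasks_per_instance <= 0:
        raise ValueError("tasks_per_instance must be positive")
    if min_instances > max_instances:
        raise ValueError("min_instances must not exceed max_instances")
    # Round up so that no task is left without an instance.
    wanted = math.ceil(num_tasks / tasks_per_instance)
    return max(min_instances, min(wanted, max_instances))


if __name__ == "__main__":
    for shards in (0, 10, 1000):
        print(shards, "shards ->", instances_needed(shards, tasks_per_instance=4), "instances")
```

For example, 10 training shards at 4 per instance give `ceil(10 / 4) = 3` instances, an empty job scales to zero, and 1,000 shards hit the assumed cap of 100. The upper bound is what keeps elastic scaling from turning into unbounded spending.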

Q: When an enterprise runs both batch video rendering and big data batch processing, how can Batch Computing help it optimize costs and simplify processes?

A: Batch Computing addresses cost and process separately. On cost, it supports pay-as-you-go billing: CVM instances are created only while batch jobs run and are destroyed automatically when the tasks finish. With no upfront cost, baseline spending for both big data batch processing and batch video rendering drops, and dynamic resource configuration matches resources to task demand so that capacity does not sit idle. On process, task definitions let you configure computing environments and execution commands quickly, without manual deployment. DAG workflow editing and task dependency modeling automate the full process, from the pipelines of batch video rendering to the complex workflows of big data batch processing. Together with the public command library and API integration, this streamlines the whole journey from task submission to result output, and the same approach applies to ML training and other batch computing scenarios.
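The cost argument for pay-as-you-go comes down to simple arithmetic. The Python sketch below compares billing only the hours in which jobs actually run with keeping the same instances running for a whole month. The hourly price, cluster size, and job hours are hypothetical placeholders, not Tencent Cloud pricing, and the model ignores instance startup time, storage, and data transfer.

```python
# Hypothetical figures for illustration only; not Tencent Cloud prices.
HOURLY_PRICE = 0.5      # assumed price per instance-hour
INSTANCES = 10          # assumed cluster size
PERIOD_HOURS = 720      # a 30-day month
JOB_HOURS = [2.0] * 30  # assumed: one 2-hour batch run per day


def pay_as_you_go_cost(job_hours: list[float], instances: int, price: float) -> float:
    """Instances are created when a job starts and destroyed when it ends,
    so only busy hours are billed (startup time is ignored here)."""
    return sum(job_hours) * instances * price


def always_on_cost(period_hours: float, instances: int, price: float) -> float:
    """Instances run for the whole period, whether busy or idle."""
    return period_hours * instances * price


if __name__ == "__main__":
    on_demand = pay_as_you_go_cost(JOB_HOURS, INSTANCES, HOURLY_PRICE)
    always_on = always_on_cost(PERIOD_HOURS, INSTANCES, HOURLY_PRICE)
    print(f"pay-as-you-go: {on_demand:.1f}")
    print(f"always-on:     {always_on:.1f}")
```

With these assumed numbers, 60 busy hours in a 720-hour month cost 300.0 under pay-as-you-go versus 3600.0 always-on. In this simple model the pay-as-you-go cost is the always-on cost scaled by the fraction of the period the instances are busy (60 / 720 here).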